Google’s New AI Approach to Improve Mobile Machine Learning Models

Applications that detect objects, classify images, and recognize faces are now commonplace, and most smartphones and other mobile devices ship with at least one of them. As many of us know, these AI functions are powered by Convolutional Neural Networks (CNNs), a class of deep artificial neural networks widely used to analyze visual imagery. However, designing CNNs for mobile devices remains a challenge, because mobile Machine Learning models need to be small, fast, and accurate. Although considerable effort has gone into improving mobile Machine Learning models, creating efficient models by hand is difficult given the large number of architectural possibilities.

In its recent paper, “MnasNet: Platform-Aware Neural Architecture Search for Mobile”, a research team from Google explores an automated neural architecture search approach that uses reinforcement learning to design mobile Machine Learning models. The automated system, named MnasNet, deals with mobile speed constraints by explicitly incorporating speed information into the main reward function of the search algorithm. This lets the search identify a model that achieves a good trade-off between accuracy and speed.
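To make the idea of a speed-aware reward concrete, here is a minimal sketch in Python. The article does not spell out the formula, so the form used here (accuracy scaled by a power of the ratio between measured latency and a target latency, which is how we understand the MnasNet paper to describe it) should be read as an assumption. The function name, the 75 ms target, and the exponent of -0.07 are illustrative values, not settings confirmed by this article.

```python
def latency_aware_reward(accuracy, latency_ms, target_ms=75.0, w=-0.07):
    """Combine accuracy and latency into a single score.

    Assumed form: accuracy * (latency / target) ** w, with w < 0.
    A model slower than the target gets its accuracy scaled down,
    and a model faster than the target gets a small bonus.
    """
    if latency_ms <= 0 or target_ms <= 0:
        raise ValueError("latencies must be positive")
    return accuracy * (latency_ms / target_ms) ** w


# Two hypothetical models with the same accuracy but different speed.
fast = latency_aware_reward(accuracy=0.75, latency_ms=60.0)
slow = latency_aware_reward(accuracy=0.75, latency_ms=120.0)
print(fast > slow)  # the faster model scores higher
```

Because the exponent is small, latency acts as a soft penalty rather than a hard cut-off: a slightly slower model can still win if it is noticeably more accurate.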

Instead of estimating model speed through a proxy, as earlier approaches did, the proposed architecture search measures model speed directly by executing the model on a particular platform. Every mobile device has its own software and hardware characteristics, and may need a different architecture to balance speed and accuracy efficiently. This new “platform-aware architecture search approach” for mobile therefore measures what is actually achievable in the real world.
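Direct measurement simply means running the model and timing it, rather than counting operations. The sketch below shows one way this could be done in Python; it is not the tooling used by the Google team. The helper name `measure_latency_ms` and the `run_inference` callable are hypothetical, and on a real device the callable would wrap a single forward pass of the model on that device.

```python
import statistics
import time


def measure_latency_ms(run_inference, warmup=3, runs=10):
    """Return the median wall-clock time of run_inference() in milliseconds."""
    # Warm-up calls let caches and lazy initialization settle
    # before any timing is recorded.
    for _ in range(warmup):
        run_inference()

    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        run_inference()
        samples.append((time.perf_counter() - start) * 1000.0)

    # The median is less sensitive to occasional slow runs than the mean.
    return statistics.median(samples)


# Usage with a placeholder workload standing in for a model's forward pass.
latency = measure_latency_ms(lambda: sum(i * i for i in range(10_000)))
print(f"median latency: {latency:.3f} ms")
```

The same model can produce very different numbers on different phones, which is exactly why the measurement has to happen on the target platform.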

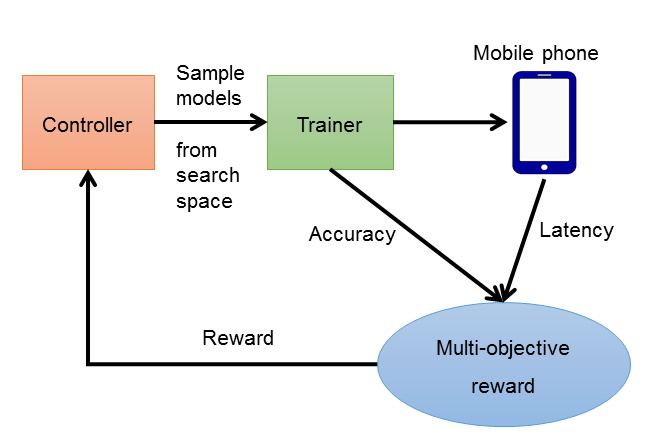

The overall flow consists of three components:

- An RNN-based (recurrent neural network) controller that learns and samples model architectures

- A trainer that builds and trains the sampled models to obtain their accuracy

- An inference engine that uses TensorFlow Lite to measure each model’s speed on real mobile phones.

Fig: Automated neural architecture search approach for mobile
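The interplay of the three components can be sketched as a simple loop. This is a deliberately simplified illustration, not MnasNet itself: the controller is replaced by uniform random sampling (the real system uses an RNN controller updated with reinforcement learning), and the trainer and latency measurement are stand-in functions that return made-up numbers. The search space, all function names, the reward form, and its constants are assumptions for the sake of the example.

```python
import random

# Hypothetical search space: each architecture is one choice per option.
SEARCH_SPACE = {
    "kernel_size": [3, 5],
    "num_layers": [2, 3, 4],
    "width": [16, 32, 64],
}


def sample_architecture(rng):
    """Stand-in for the controller: picks every option uniformly at random."""
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}


def train_and_evaluate(arch):
    """Stand-in for the trainer: a made-up accuracy that grows with model size."""
    size = arch["kernel_size"] * arch["num_layers"] * arch["width"]
    return min(0.99, 0.5 + 0.0005 * size)


def measure_latency_ms(arch):
    """Stand-in for on-device measurement: a made-up latency in milliseconds."""
    return 0.1 * arch["kernel_size"] ** 2 * arch["num_layers"] * arch["width"]


def reward(accuracy, latency_ms, target_ms=75.0, w=-0.07):
    """Assumed reward: accuracy softly penalized by latency over a target."""
    return accuracy * (latency_ms / target_ms) ** w


def search(num_samples=50, seed=0):
    """Sample architectures, score each one, and keep the best."""
    rng = random.Random(seed)
    best = None
    for _ in range(num_samples):
        arch = sample_architecture(rng)      # controller proposes a model
        accuracy = train_and_evaluate(arch)  # trainer reports accuracy
        latency = measure_latency_ms(arch)   # inference engine reports speed
        score = reward(accuracy, latency)
        if best is None or score > best[0]:
            best = (score, arch, accuracy, latency)
    return best


score, arch, accuracy, latency = search()
print(arch, f"accuracy={accuracy:.3f}", f"latency={latency:.1f} ms")
```

In the actual system, the reward would be fed back to the controller so that later samples favor architectures with a better accuracy and speed trade-off; the random sampler here ignores that feedback.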

Google’s research team has tested the effectiveness of this approach on the following datasets:

- ImageNet, an image database maintained by Stanford and Princeton, and

- the Common Objects in Context (COCO) object recognition dataset.

The results show that the resulting Machine Learning models run 1.5x faster than the mobile model MobileNetV2 and 2.4x faster than NASNet, which was also designed using neural architecture search. On COCO object detection, the models achieve both higher accuracy and higher speed than MobileNet. The new automated approach can achieve state-of-the-art performance on multiple complex mobile vision tasks. Google plans to incorporate more operations and optimizations into the search space, and to apply the approach to more mobile vision tasks such as semantic segmentation.
