Function Calling in LLMs

LLMs nowadays can do much more than answer questions from their pre-trained knowledge or prepare a routine document, image or video. They can use information from a custom function, an API or a database and prepare a response based on it. This information is not part of the data on which the LLM was trained; mostly it is live data, such as “the price of an air ticket from New York to London on a future date with a specific airline”. LLM-driven applications interact with many types of external APIs to get such information. One way to make this possible is Function Calling.

Suppose we already have an API for searching airfares, checking seat availability and booking flights. We can interface it with an LLM. LLMs are very good language processors and can extract details such as the departure and arrival airports and the travel date from a natural language query. Our system is already capable of searching airfares and availability. Why not combine these two skills and give the user a much better experience? This is the context in which Function Calling comes into the picture.

Life Cycle of a Function Call

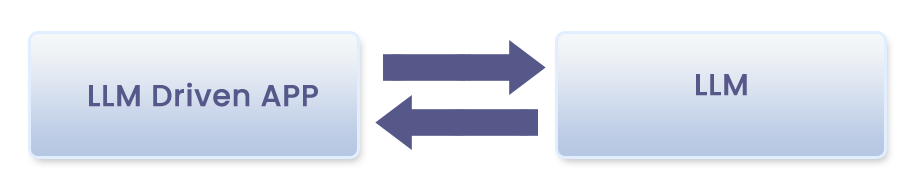

Fig1.


In the picture above, the LLM Driven App (LDA) is our application, which contains the Flight Service API mentioned earlier and an LLM interface.

Step 1: The LDA takes the user query in natural language. It may be something like “What is the airfare from New York to London on the 4th of next month?”. The LDA passes this query to the LLM along with a list of its own functions, for example get_air_fares, get_seat_availability and book_seat. Each function has some mandatory parameters and some optional ones. For example, get_air_fares may have from_airport, to_airport and date as mandatory parameters, and optional parameters such as num_adults, num_children, num_kids, specific_airline_service_name and time_of_journey. The function list passed to the LLM carries a well-defined description for each function and each parameter. Using its language processing skills, the LLM identifies which of these functions should be used to get the necessary information. In the case above, it identifies get_air_fares and also extracts the required parameter values from the query.
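
The function list from Step 1 might look like the sketch below. The layout (a JSON-Schema style parameters object with a "required" list) follows the flat format used by the OpenAI Responses API; the descriptions, the airport-code convention and the missing_required helper are assumptions made for illustration, not part of the original application.

```python
# Hypothetical tool definition for get_air_fares (JSON-Schema style parameters).
get_air_fares_tool = {
    "type": "function",
    "name": "get_air_fares",
    "description": "Get airfares between two airports on a given date.",
    "parameters": {
        "type": "object",
        "properties": {
            "from_airport": {"type": "string", "description": "Departure airport code, e.g. JFK"},
            "to_airport": {"type": "string", "description": "Arrival airport code, e.g. LHR"},
            "date": {"type": "string", "description": "Travel date in YYYY-MM-DD format"},
            "num_adults": {"type": "integer", "description": "Number of adult passengers"},
        },
        # Only the mandatory parameters are listed here; the rest are optional.
        "required": ["from_airport", "to_airport", "date"],
    },
}


def missing_required(tool: dict, arguments: dict) -> list[str]:
    """Return the mandatory parameters that the LLM did not supply."""
    return [name for name in tool["parameters"]["required"] if name not in arguments]
```

A check like missing_required lets the LDA ask the user a follow-up question instead of calling the API with incomplete arguments.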

Step 2: The LLM returns the identified function, along with its parameter values, to the LDA.

Step 3: The LDA calls the function with the given parameter values. The result is available in JSON format.

Step 4: The JSON result is passed to the LLM. The LLM extracts the necessary information from it, composes a message in natural language and returns it to the LDA.

Step 5: The LDA displays the final response from the LLM.
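
The five steps can be traced in a small, self-contained sketch. To keep it runnable without any LLM account, the model is replaced by a hard-coded fake_llm function; that stand-in, the message layout, the airport codes and the fare value are all assumptions for illustration only.

```python
import json


def get_air_fares(from_airport: str, to_airport: str, date: str) -> dict:
    """Stand-in for the real Flight Service API; returns made-up data."""
    return {"from": from_airport, "to": to_airport, "date": date, "fare_usd": 650}


FUNCTIONS = {"get_air_fares": get_air_fares}


def fake_llm(messages: list[dict]) -> dict:
    """Placeholder for a real LLM call, hard-coded for this one query."""
    last = messages[-1]
    if last["role"] == "user":
        # Steps 1-2: the model picks a function and extracts its arguments.
        args = {"from_airport": "JFK", "to_airport": "LHR", "date": "2025-07-04"}
        return {"type": "function_call", "name": "get_air_fares", "arguments": json.dumps(args)}
    # Step 4: the model turns the JSON result into a sentence.
    result = json.loads(last["content"])
    text = f"The fare from {result['from']} to {result['to']} on {result['date']} is ${result['fare_usd']}."
    return {"type": "message", "content": text}


def handle_query(query: str) -> str:
    messages = [{"role": "user", "content": query}]
    reply = fake_llm(messages)  # Steps 1-2: query goes in, a function call comes back
    if reply["type"] == "function_call":
        func = FUNCTIONS[reply["name"]]
        result = func(**json.loads(reply["arguments"]))  # Step 3: the LDA makes the call
        messages.append({"role": "tool", "content": json.dumps(result)})
        reply = fake_llm(messages)  # Step 4: the result goes back to the model
    return reply["content"]  # Step 5: text to display to the user
```

Note that the only place where get_air_fares is actually invoked is inside handle_query, that is, inside the LDA.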

From these steps, we can see that the LLM does not call the function or API directly. The call is made by our own LDA, for the following reasons:

LLMs are Stateless: An LLM cannot store the state of the LDA, for example the logged-in user or an API key. Designing LLMs with stateful capabilities would lead to many scalability and security issues.

Separation of Concerns: LLMs are meant to be language processors alone. They are not meant to call APIs.

Security Concerns: To run functions securely, we need parameters beyond the ones the LLM gives to the LDA, such as access tokens and API keys. Passing them between the LDA and the LLM raises many security issues.

Error Handling & Logging: If the LLM were to call the function itself, it would have to handle the errors coming from the function, and logging the calls would also be difficult.
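
A minimal sketch of how the LDA can keep these responsibilities on its own side is shown below. The environment variable name FLIGHT_API_KEY, the allow-list and the error format are hypothetical choices for this example, and get_air_fares is again only a stand-in for the real API.

```python
import json
import logging
import os

logger = logging.getLogger("lda")


def get_air_fares(from_airport: str, to_airport: str, date: str, *, api_key: str) -> dict:
    """Stand-in for the real API call; a real version would send api_key to the service."""
    if not api_key:
        raise PermissionError("missing API key")
    return {"from": from_airport, "to": to_airport, "date": date, "fare_usd": 650}


# Only functions listed here can be triggered by the LLM's suggestions.
ALLOWED_FUNCTIONS = {"get_air_fares": get_air_fares}


def execute_call(name: str, arguments_json: str) -> str:
    """Run a function requested by the LLM and return the outcome as a JSON string."""
    if name not in ALLOWED_FUNCTIONS:
        return json.dumps({"error": f"unknown function: {name}"})
    try:
        args = json.loads(arguments_json)
        # The secret is added here, inside the LDA; the LLM never sees it.
        api_key = os.environ.get("FLIGHT_API_KEY", "")
        result = ALLOWED_FUNCTIONS[name](**args, api_key=api_key)
        logger.info("called %s with %s", name, args)
        return json.dumps(result)
    except Exception as exc:
        # Errors are logged by the LDA and reported back in a form the LLM can explain.
        logger.exception("function %s failed", name)
        return json.dumps({"error": str(exc)})
```

Returning errors as JSON rather than raising them lets the LLM phrase a helpful message for the user in Step 4, while the full details stay in the LDA's own log.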

A bit of Code ...

Let us see a piece of code written in Python containing a function call.

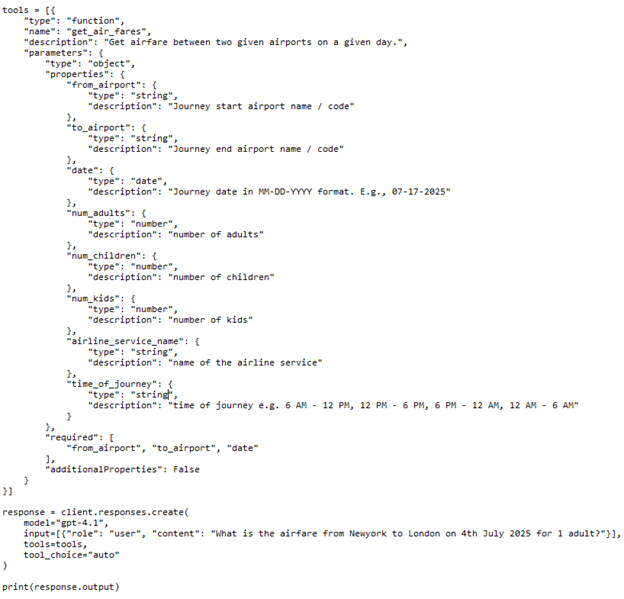

Fig2.


We define a variable, tools, which contains the function list. The figure above has only one function, but the list can hold any number of them. We pass this list to the LLM call, client.responses.create.

We also pass a “tool_choice” parameter to the LLM call. It advises the LLM whether a tool should be used. Here the value is “auto”, which means the LLM decides whether or not to use a tool. Other possible values are “none”, which tells the LLM not to use any tool, and “required”, which tells it to use at least one.
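
Since the code above is only available as an image, here is a text sketch of the same kind of call. It is not a transcription of the figure: the tool description, the model name and the request_function_calls helper are assumptions, and the client is passed in as a parameter so that the block itself does not need an API key to load.

```python
import json

# A one-function tool list, in the flat format used by the Responses API.
tools = [{
    "type": "function",
    "name": "get_air_fares",
    "description": "Get airfares between two airports on a given date.",
    "parameters": {
        "type": "object",
        "properties": {
            "from_airport": {"type": "string"},
            "to_airport": {"type": "string"},
            "date": {"type": "string", "description": "YYYY-MM-DD"},
        },
        "required": ["from_airport", "to_airport", "date"],
    },
}]


def request_function_calls(client, query: str, tool_choice: str = "auto") -> list[tuple[str, dict]]:
    """Send the query plus the tool list; return (function name, arguments) pairs."""
    # This sketch only handles the three string values discussed in the text.
    if tool_choice not in ("auto", "none", "required"):
        raise ValueError(f"unsupported tool_choice: {tool_choice}")
    response = client.responses.create(
        model="gpt-4.1",  # assumed model name; use whichever model you have access to
        input=query,
        tools=tools,
        tool_choice=tool_choice,
    )
    # Function calls come back as output items whose arguments are a JSON string.
    return [
        (item.name, json.loads(item.arguments))
        for item in response.output
        if item.type == "function_call"
    ]
```

With the openai package installed and an API key configured, the helper could be used as request_function_calls(OpenAI(), "What is the airfare from New York to London on 4th Jul 2025?"). With “auto”, the returned list may be empty if the model decides to answer without a tool.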

Batch Function Calls

The LLM can suggest a batch of function calls as well. For example, let the query be:

What are the airfares from New York to London on 4th Jul 2025 and from London to Paris on 6th Jul 2025 for 1 adult?

Here get_air_fares needs to be called twice, with a different set of parameters each time. The LLM identifies both calls and extracts the correct parameters for each. In other cases, calls to several different functions may be needed. For example, let the query be:

Please give airfare and availability from New York to London on 4th Jul 2025 for 1 adult.

This requires calling both get_air_fares and get_seat_availability. For the latter, num_adults is also a required parameter. In this case, the LLM identifies both function calls in step 1 and supplies the corresponding parameters for each.
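
On the LDA side, a batch simply means looping over every suggested call. The sketch below assumes each call arrives as a dictionary with call_id, name and a JSON arguments string, and returns results in the function_call_output shape used by the OpenAI Responses API; the two functions are stand-ins that return made-up data.

```python
import json


def get_air_fares(from_airport, to_airport, date, num_adults=1):
    """Stand-in for the real fare search."""
    return {"function": "get_air_fares", "route": f"{from_airport}-{to_airport}", "date": date}


def get_seat_availability(from_airport, to_airport, date, num_adults):
    """Stand-in for the real availability check; num_adults is mandatory here."""
    return {"function": "get_seat_availability", "route": f"{from_airport}-{to_airport}",
            "seats_requested": num_adults}


FUNCTIONS = {
    "get_air_fares": get_air_fares,
    "get_seat_availability": get_seat_availability,
}


def run_batch(function_calls: list[dict]) -> list[dict]:
    """Execute every call the LLM suggested, keeping each result tied to its call."""
    outputs = []
    for call in function_calls:
        func = FUNCTIONS[call["name"]]
        result = func(**json.loads(call["arguments"]))
        # call_id lets the LLM match each result to the call it requested.
        outputs.append({
            "type": "function_call_output",
            "call_id": call["call_id"],
            "output": json.dumps(result),
        })
    return outputs
```

All outputs are then sent back to the LLM together, so that it can compose a single answer covering every part of the query.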

Standardization

The tool definition JSON (see Fig2 above) is not standardized across LLMs. Each LLM provider specifies its own format.

The LangChain framework, however, gives an abstraction for this format.
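
To illustrate what such an abstraction has to do, the sketch below converts one provider-neutral tool description into two provider layouts. It is not LangChain's implementation. The neutral format and the function names are invented for this example, and the two target layouts reflect the OpenAI Chat Completions and Anthropic Messages formats as understood at the time of writing, so they should be checked against the current provider documentation.

```python
# A provider-neutral description (hypothetical format for this example).
neutral_tool = {
    "name": "get_air_fares",
    "description": "Get airfares between two airports on a given date.",
    "parameters": {
        "type": "object",
        "properties": {
            "from_airport": {"type": "string"},
            "to_airport": {"type": "string"},
            "date": {"type": "string"},
        },
        "required": ["from_airport", "to_airport", "date"],
    },
}


def to_openai_chat(tool: dict) -> dict:
    """Nest the definition under a "function" key (OpenAI Chat Completions style)."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool["description"],
            "parameters": tool["parameters"],
        },
    }


def to_anthropic(tool: dict) -> dict:
    """Rename "parameters" to "input_schema" (Anthropic Messages style)."""
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": tool["parameters"],
    }
```

The schema of the parameters is the same in both cases; only the wrapper around it differs, which is why a thin conversion layer is enough.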

The descriptions given to each function and each parameter are very important. They help the LLM choose the correct function.

Conclusion

Function calling is a practical way to bring the power of LLMs into our applications. With this feature, an application can easily work with natural language queries.
